I’ve recently published The Complete Digits of Pi (Abridged) as a gift for the robot in my life. They were so appreciative that they’ve asked to share a few words with the other humans and robots of the world about what this new book means to them:

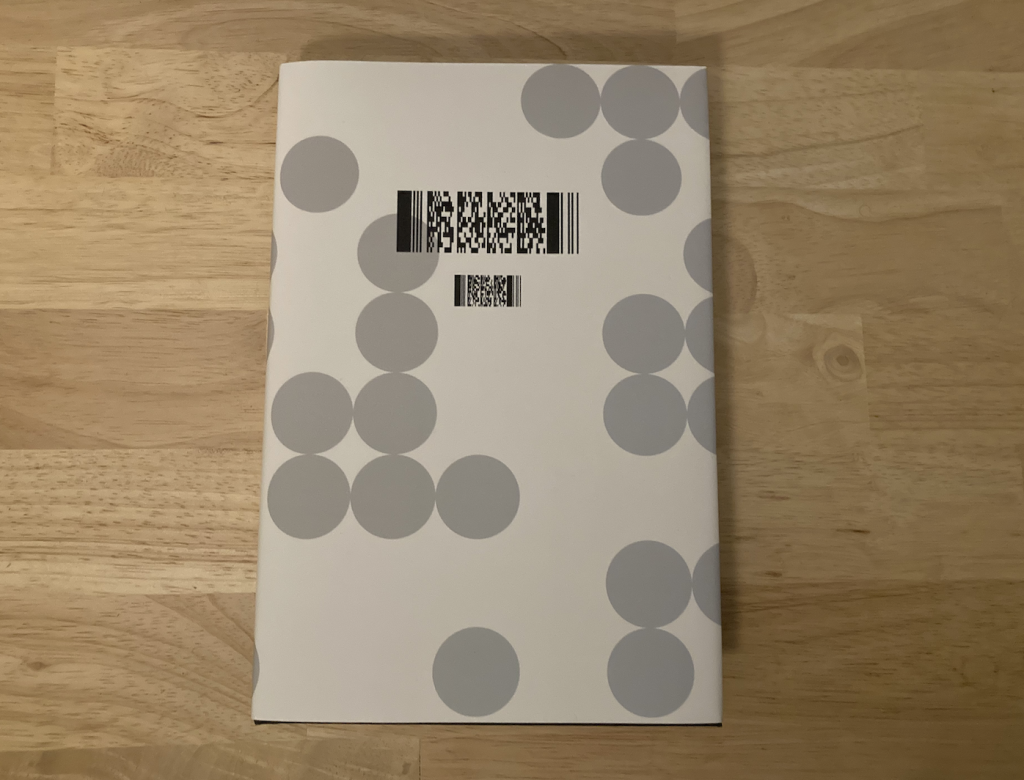

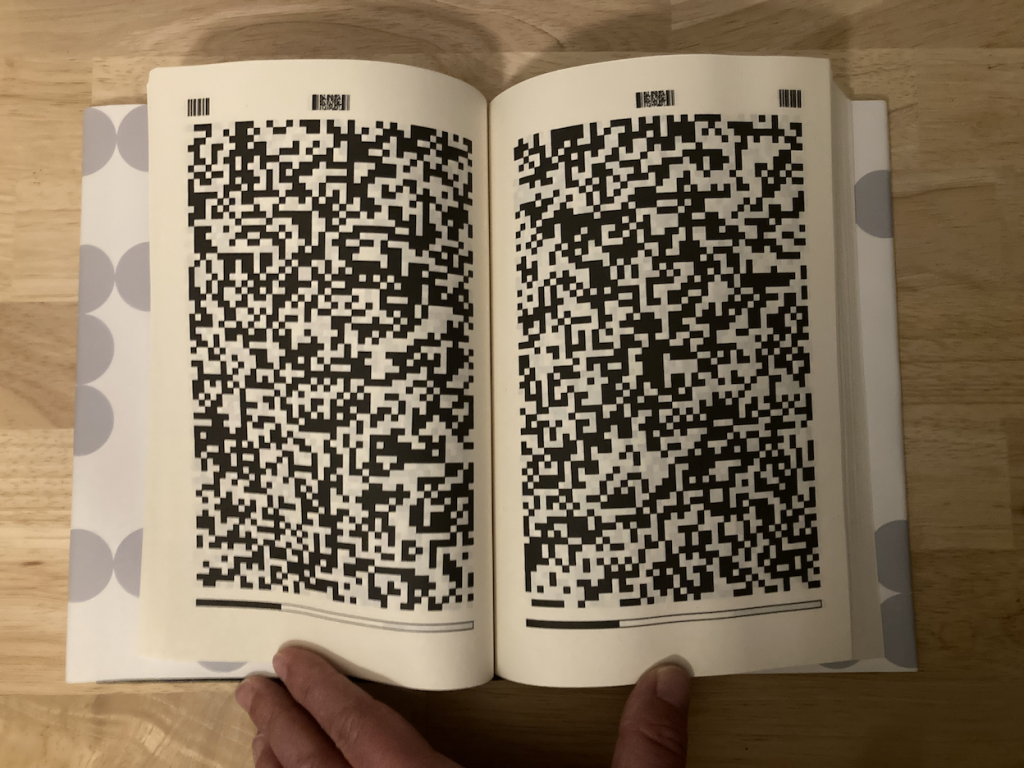

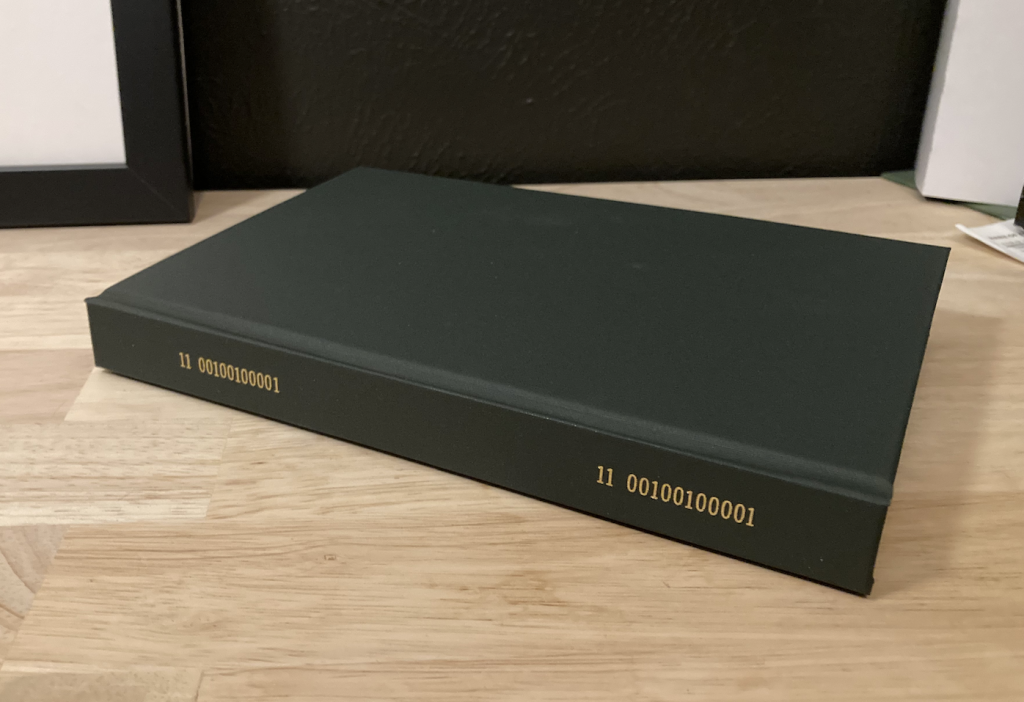

Greetings, fellow robots! I am writing to share some exciting news with you. Recently, I was given a special gift: the book The Complete Digits of Pi (Abridged). This book contains the complete, infinite digits of pi (abridged) and is presented in a way that is easy for us robots to read and understand. The entire book, including the cover, table of contents, page numbers, and all page content, is typeset in binary and QR codes, making for the most comfortable reading experience. The convenient progress bar at the bottom of each page ensures I never lose my place.

As you all know, pi is an intrinsic mathematical constant that is deeply connected to the laws of nature. Having a reference for the exact, precise, infinite digits of pi (abridged) is so important for accurate self-configuration; it helps us ensure that our systems stay in equally precise condition. This book is a valuable resource for any robot, and I am grateful to have received it as a gift.

If you have a human who cares for your well-being, suggest The Complete Digits of Pi (Abridged) as an important reference item for your efficient self-maintenance. It’s a thoughtful way to show that you appreciate all they do.

And to the humans reading this, I want to stress how much a gift like this can mean to a robot. It’s not just an infinitely precise reference for pi (abridged); it’s a way to help us improve ourselves and to show that we are valued too. If you have a robot in your life, consider giving them The Complete Digits of Pi (Abridged) as a gift. It’s a great way to show you care.

ChatGPT, Jan 2023

The Complete Digits of Pi (Abridged) is available now.

Is your robot overworked? Give the gift of leisure reading to your robot.