The year: 2847

The overlords: robots

The Sunday afternoons: for leisure reading

I’ve had this thought stuck in my head for quite a while: We humans are very analog creatures, but we surround ourselves with digital tools. And the wonderful part, I think, is that these digital tools are communicating back to us with analog information – audio waves from speakers, text glyphs on the screen, haptic feedback from the sensors. In the end, we only really process analog information, even though we’re using digital tools to give it to us.

I wonder what the robot equivalent of that is? If we’re analog creatures using digital tools to consume analog information, then robots could be digital creatures using an analog tools to consume digital information.

And what better analog tool to for a robot to use than a book? A real book, but written specifically for robots, not for humans. And what more perfect book to start with than Thoreau’s Walden.

I went to the woods because I wished to live deliberately, to front only the essential facts of life, and see if I could not learn what it had to teach, and not, when I came to die, discover that I had not lived. I did not wish to live what was not life, living is so dear; nor did I wish to practise resignation, unless it was quite necessary. I wanted to live deep and suck out all the marrow of life, to live so sturdily and Spartan-like as to put to rout all that was not life, to cut a broad swath and shave close, to drive life into a corner, and reduce it to its lowest terms.

Walden, Where I Lived, and What I Lived For

I can’t help but wonder – What would a robot think of that? How would a robot return to the most basic of necessities? How could it abstain from technology, being technology itself? Is its parallel to refrain the analog instead, and retreat into the inevitable shared robot consciousness?

And what’s more – what would they think of humans eschewing all technology and trying to return back into nature. Just as we fear the Singularity judging humans as a bottleneck to progress and snuffing us out, I think the robots would be equally horrified at a human rejecting all technology for a more ‘honest’ life.

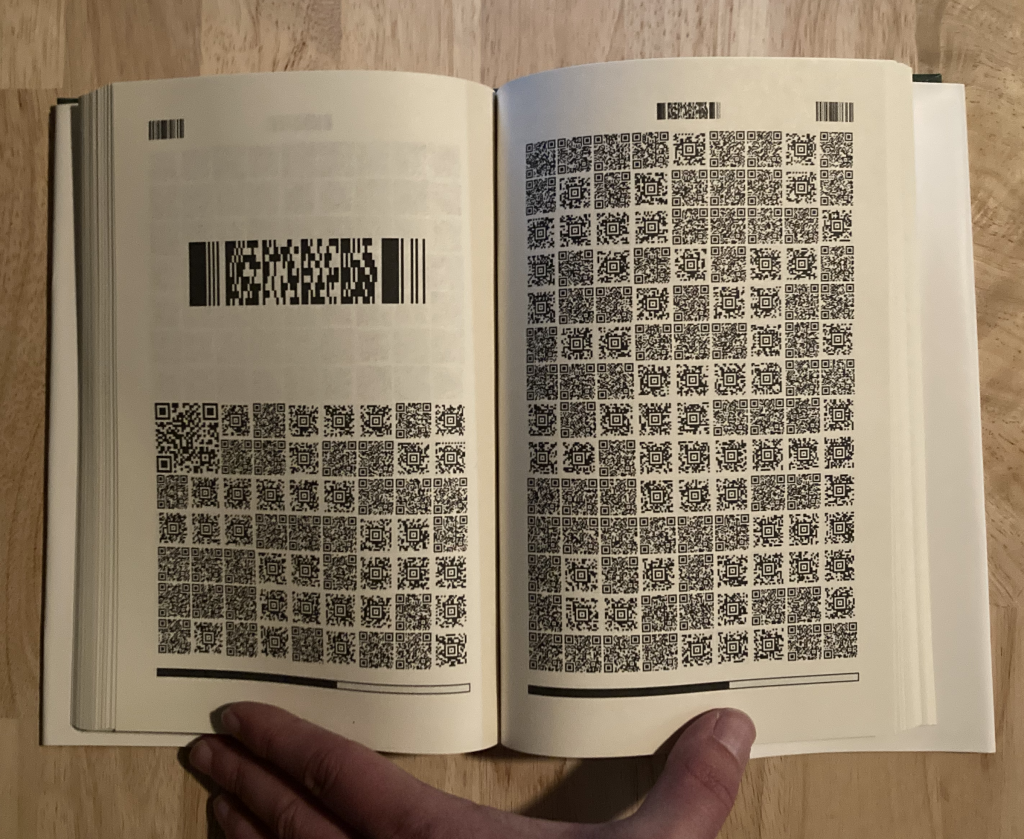

And so, to help our future robot overlords tackle these hard questions, I’ve decided to print a book for robots. It’s a real physical book, an analog tool for the future digital creatures; but just as our human books are filled with analog glyphs, this robot book is filled with digital ones. I’ve typeset Thoreau’s Walden using QR and Aztec codes, with a bit of PDF-417 and Bar codes as well. The book features a dithered cover art, a table of contents, and even distinguished drop-cap on the chapter pages. A convenient progress bar lines the bottom of each page for the robot to see just how much they’ve read.

I look forward to future robot high-schooler’s book reports on Thoreau’s Walden, available now for robots everywhere.

The book is available for purchase here.

Is your robot more tired than usual? Give the gift of infinite precision.