At Muse we’ve been thinking a lot about conflict-free replicated data types (CRDTs), and how they might enable local-first sync across devices. Automerge, out of the Ink & Switch lab, has done incredible work building a sophisticated CRDT implementation.

I learn best by getting my hands dirty and building. As I’ve been learning about CRDTs, the first piece that caught my eye was the various clocks that can be used to keep disconnected peers aligned with each other.

If I edit an object on one device, then edit that same object on another device, and then sync both devices together, I want to be 100% confident that both devices see those edits in the exact same order. This is what puts the “conflict-free” in CRDT. Distributed clocks provide this guaranteed agreement on ordering.

I’ve started building various clocks for use in CRDTs in Swift, in the Clocks Swift Package on GitHub.

Last Write Wins

The simplest CRDT is a last-write-wins map. That is, for any given value in my data structure, I only update the value when what I’m receiving is more recent than the current value. So if a count is set to 11 at time T2, and then I receive an event setting it to 17 at time T3, I would update the value to 17. But if I then hear of an event setting the value to 5 at time T1, I would ignore that change since I’m already at time T3. Every atomic value in the data structure is essentially a tuple of its value and the last time it was changed.
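The count example above can be sketched in a few lines of Swift. This is only a minimal illustration, not the code from the Clocks package: the `LWWRegister` type and its `merge` method are names I made up for this sketch, and plain integers stand in for T1, T2, and T3.

```swift
// A last-write-wins register: a value paired with the time it was last set.
// `LWWRegister` and `merge` are hypothetical names used only for this sketch.
struct LWWRegister<Value, Timestamp: Comparable> {
    private(set) var value: Value
    private(set) var timestamp: Timestamp

    init(value: Value, timestamp: Timestamp) {
        self.value = value
        self.timestamp = timestamp
    }

    // Applies an incoming write only if it is strictly newer than the current one.
    // Returns true when the write was accepted.
    @discardableResult
    mutating func merge(value newValue: Value, timestamp newTimestamp: Timestamp) -> Bool {
        guard newTimestamp > timestamp else { return false }
        value = newValue
        timestamp = newTimestamp
        return true
    }
}

var count = LWWRegister(value: 11, timestamp: 2)  // set to 11 at T2
count.merge(value: 17, timestamp: 3)              // T3 is newer, so it is accepted
count.merge(value: 5, timestamp: 1)               // T1 is older, so it is ignored
print(count.value)                                // prints 17
```

Because the register only needs `Comparable` timestamps, any of the clocks discussed below could be dropped in for the integers.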

This means that the clocks on the different clients need to be able to correctly order all events from all other clients, adjust and account for any clock drift, and even account for a bad actor (intentional or accidental) whose clock might be ahead or behind by a significant amount of time. If my laptop is somehow 24 hours ahead and I make a change, I don’t want to wait 24 hours for my iPad’s slower clock to catch up before I’m able to make additional edits there.

World Clock

An important question, then, is how do we define the timestamps T1, T2, and T3? We could use a UTC timestamp, though there are a few downsides: some clients might not be perfectly synchronized, and could be minutes or hours ahead of another client. If my device had its system clock set a day ahead, and I make an edit, then no other devices would be able to make a change for 24 hours as their system clocks would all be ‘before’ the edit.

Another problem with timestamps is that they might exactly overlap. With a resolution of one second, events will almost certainly share the exact same timestamp, making ordering impossible. Using milliseconds helps, but could still cause sync issues, particularly in realtime collaboration settings. Even within a single client, many events might be generated at the same instant, yielding events with identical timestamps.

What we really want is a notion of a timestamp that is always:

- unique from any other timestamp

- orderable compared to any other timestamp

- monotonically increasing so that we can generate new timestamps after existing ones

- independent of coordination between clients, so that both clients will agree on an event ordering even if their first communication happens after they have generated all of their event timestamps

Below are a few different clocks I’ve been learning about, each of which accomplishes the above to a varying degree. I’ve implemented each of these in Swift in the Clocks Swift Package on GitHub.

Lamport Clocks

Lamport clocks are perhaps the simplest clocks to start us off: they are essentially just a counter. The counter starts at 1, and every time an event occurs the counter is incremented. Whenever a message is received, the counter is set to one more than the max of the local and remote values. This post by Miafish has a great explanation and diagram of Lamport clocks.

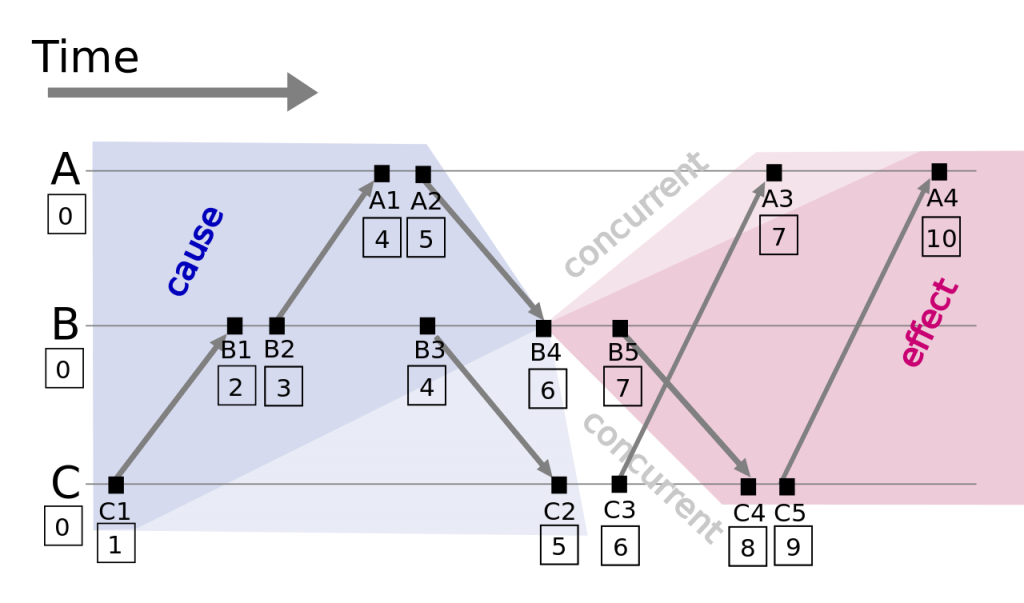

The diagram below from the Wikipedia article shows how three clients each with independent clocks can all agree on the ordering of each other’s events, and are able to order all events before or after event B4.

CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=888307

In some ways, this is extremely similar to using timestamps, except instead of using UTC seconds, an increasing integer counter is used. This prevents a client from generating duplicate timestamps from its own clock, which could happen by using world time, but doesn’t solve the issue of two clients generating the same timestamp independently. In the above diagram, both device B and device C create an event at time 6 (B4 and C3). Which event came first? Using a unique client identifier to break the tie is a fairly good solution. Each client can generate a UUID, and then any events with the same Lamport clock value can be sorted based on the UUID of its originating client.
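Here is a minimal sketch of a Lamport clock with the UUID tiebreaker described above. The type and method names (`LamportTimestamp`, `LamportClock`, `tick`, `receive`) are my own for this illustration and are not necessarily what the Clocks package uses. Comparing the UUIDs by their string form is just one arbitrary but stable choice of tiebreak order.

```swift
import Foundation

// A Lamport timestamp: a counter plus the originating client's id as a tiebreaker.
struct LamportTimestamp: Comparable, Hashable {
    let counter: UInt64
    let clientID: UUID

    static func < (lhs: LamportTimestamp, rhs: LamportTimestamp) -> Bool {
        if lhs.counter != rhs.counter { return lhs.counter < rhs.counter }
        // Equal counters: fall back to a stable ordering of the client ids.
        return lhs.clientID.uuidString < rhs.clientID.uuidString
    }
}

struct LamportClock {
    let clientID: UUID
    private(set) var counter: UInt64 = 0

    init(clientID: UUID = UUID()) { self.clientID = clientID }

    // Call for every local event: bump the counter and stamp the event.
    mutating func tick() -> LamportTimestamp {
        counter += 1
        return LamportTimestamp(counter: counter, clientID: clientID)
    }

    // Call when a message arrives: move past the larger of the two counters.
    mutating func receive(_ remote: LamportTimestamp) -> LamportTimestamp {
        counter = max(counter, remote.counter) + 1
        return LamportTimestamp(counter: counter, clientID: clientID)
    }
}

var b = LamportClock()
var c = LamportClock()
let b1 = b.tick()        // counter 1 on client B
let c1 = c.tick()        // counter 1 on client C: same counter, different client
let c2 = c.receive(b1)   // counter 2: ordered after both b1 and c1
```

Here `b1` and `c1` share a counter value, but every client sorts them the same way because the client ids never change.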

Vector Clocks

Vector clocks are essentially multi-dimensional Lamport clocks. Instead of each client updating a shared Lamport clock with the largest-yet-seen value, each client maintains its own clock and increments it whenever a local change occurs. Upon hearing of events from other clients, a client records the clock values it has heard for those clients alongside its own, so the single clock becomes a vector of multiple clocks.

Whenever a client receives a message from another client, it increments its own clock for each change, and stores the max value it has seen for each of the other clocks along with it. Miafish’s post that I mentioned above also does a great job explaining vector clocks in a simple and clear way.

A timestamp in clock A is said to be before a timestamp in clock B if:

- All vector values in clock A are less than or equal to the corresponding values in clock B

- At least one value in clock A is less than the corresponding value in clock B

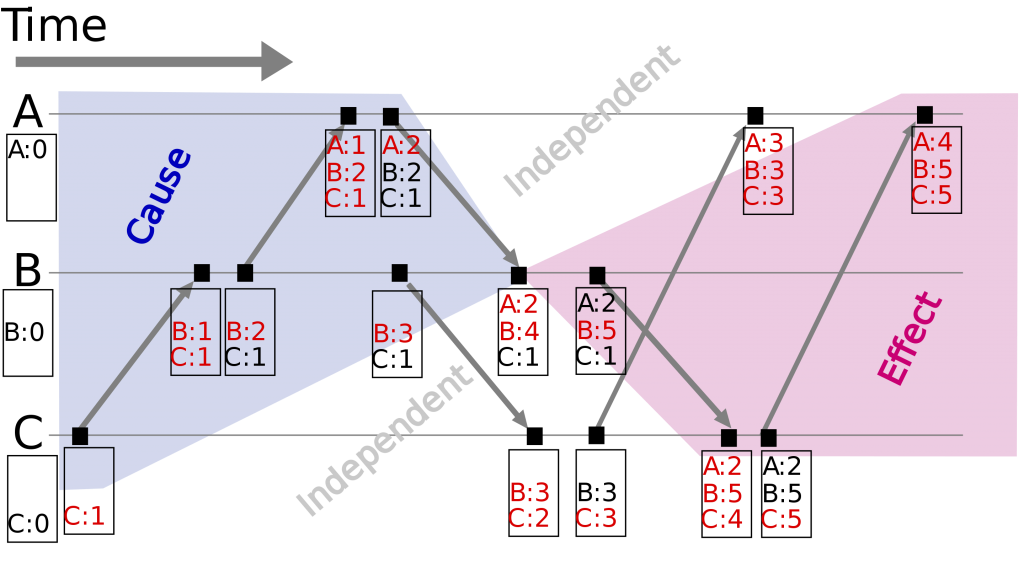

This gives vector clocks an interesting property – they can determine if an event occurred before any other event, but also can determine if an event happened ‘simultaneously’ with another event. In the diagram below, the event B3C2 (in C’s client) happens in parallel with event A2B4C1 (in B’s client), as the C count is larger in B3C2, but the B count is smaller.

CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=1187502
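The comparison rules above, including the ‘simultaneous’ case, can be sketched like this. It is a minimal illustration under my own assumptions: the `VectorClock` type, its `Order` enum, and the use of plain strings as client ids are all made up for readability, and a client missing from a vector is treated as having a count of zero.

```swift
// A vector clock: one counter per known client. Missing entries count as zero.
struct VectorClock {
    enum Order { case before, after, equal, concurrent }

    private(set) var counters: [String: UInt64] = [:]

    init(_ counters: [String: UInt64] = [:]) { self.counters = counters }

    // A local event increments only this client's own entry.
    mutating func tick(for client: String) {
        counters[client, default: 0] += 1
    }

    // On receive: take the per-client max of both vectors, then tick our own entry.
    mutating func receive(_ remote: VectorClock, at client: String) {
        counters.merge(remote.counters) { max($0, $1) }
        tick(for: client)
    }

    func compare(to other: VectorClock) -> Order {
        var someLess = false, someGreater = false
        for client in Set(counters.keys).union(other.counters.keys) {
            let mine = counters[client, default: 0]
            let theirs = other.counters[client, default: 0]
            if mine < theirs { someLess = true }
            if mine > theirs { someGreater = true }
        }
        switch (someLess, someGreater) {
        case (false, false): return .equal
        case (true, false): return .before
        case (false, true): return .after
        case (true, true): return .concurrent   // smaller in one slot, larger in another
        }
    }
}

let onC = VectorClock(["B": 3, "C": 2])           // event B3C2
let onB = VectorClock(["A": 2, "B": 4, "C": 1])   // event A2B4C1
let relation = onC.compare(to: onB)               // .concurrent
```

The `.concurrent` result is the extra information a single Lamport counter cannot give: neither event could have known about the other.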

Hybrid Logical Clocks

Surprisingly, Wikipedia does not have an article for Hybrid Logical Clocks, but the post by Jared Forsyth based on the talk by James Long is a fantastic reference for how they work.

Hybrid logical clocks build upon the foundation of the Lamport clock, and add in a wall clock component as well. This clock has three components: a wall clock time, a counter, and the clock’s unique identifier. In the exceptionally rare instance that two clocks’ wall times and counters both match, the identifier will break the tie.

Here’s how the clock works:

- Initialize the clock with the current UTC timestamp and a counter of zero

- Whenever an event happens:

  - If the current wall clock time is after the current HLC timestamp, then update the timestamp and reset the counter to zero

  - If the current wall clock time is before or equal to the current HLC timestamp, leave the timestamp unchanged and increment the counter

- Whenever an event is received:

  - If the local wall clock time is later than both our HLC timestamp and the event’s HLC timestamp, use the wall clock time and reset the counter to zero. Otherwise the wall clock has nothing newer to offer, so we ignore it and apply whichever of the remaining rules matches

  - If our HLC timestamp is equal to the event’s HLC timestamp, set our counter to one more than the max of both counters

  - If our HLC timestamp is after the event’s HLC timestamp, keep our timestamp and increment our counter

  - Last, if the event’s HLC timestamp is after our timestamp, use its timestamp and set our counter to one more than its counter
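The rules above translate almost line for line into Swift. What follows is a minimal sketch rather than the implementation in the Clocks package: the names `HLCTimestamp` and `HybridLogicalClock`, the millisecond resolution, and the injectable `wallClock` closure (there so a misbehaving clock can be simulated) are all assumptions of mine.

```swift
import Foundation

// One reading of a hybrid logical clock: wall time, counter, and clock id.
struct HLCTimestamp: Comparable {
    var millis: Int64     // wall clock component, milliseconds since 1970 (UTC)
    var counter: UInt32   // separates events that share the same millis
    var clockID: String   // breaks ties between different clocks

    static func < (lhs: HLCTimestamp, rhs: HLCTimestamp) -> Bool {
        (lhs.millis, lhs.counter, lhs.clockID) < (rhs.millis, rhs.counter, rhs.clockID)
    }
}

struct HybridLogicalClock {
    private(set) var latest: HLCTimestamp
    let wallClock: () -> Int64

    init(clockID: String = UUID().uuidString,
         wallClock: @escaping () -> Int64 = { Int64(Date().timeIntervalSince1970 * 1000) }) {
        self.wallClock = wallClock
        self.latest = HLCTimestamp(millis: wallClock(), counter: 0, clockID: clockID)
    }

    // A local event happened.
    mutating func tick() -> HLCTimestamp {
        let wall = wallClock()
        if wall > latest.millis {
            latest.millis = wall
            latest.counter = 0
        } else {
            latest.counter += 1
        }
        return latest
    }

    // An event from another clock was received.
    mutating func receive(_ remote: HLCTimestamp) -> HLCTimestamp {
        let wall = wallClock()
        if wall > latest.millis && wall > remote.millis {
            latest.millis = wall
            latest.counter = 0
        } else if latest.millis == remote.millis {
            latest.counter = max(latest.counter, remote.counter) + 1
        } else if latest.millis > remote.millis {
            latest.counter += 1
        } else {
            latest.millis = remote.millis
            latest.counter = remote.counter + 1
        }
        return latest
    }
}

// Clock A's wall clock is a full day ahead of clock B's.
let day: Int64 = 86_400_000
var a = HybridLogicalClock(clockID: "A", wallClock: { 1_000 + day })
var b = HybridLogicalClock(clockID: "B", wallClock: { 1_000 })
let fromA = a.tick()
let seen = b.receive(fromA)   // B adopts A's later timestamp
let next = b.tick()           // B can keep editing right away
```

Even though B’s wall clock never advances in this example, `fromA < seen < next` holds, so B’s new edits sort after the ones it received from A.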

What’s so nice about this clock is that it’s extremely easy to implement: the rules are straightforward and easy to read and debug. Each moment recorded by the HLC has a wall-clock timestamp component, so it’s also easy to see roughly when in real-world time an event occurred. And all events from all clients can be uniquely ordered, with every client agreeing on the ordering of events without the need for a central-server arbiter.

What’s particularly nice about this clock, too, is how it handles a misbehaving wall clock. Jared does a great job explaining why:

Let’s be clear up-front about the promises this clock is making; it cannot divine the actual real-life ordering of all events. It does however make the following guarantees:

- All events created on a single machine will be correctly ordered with respect to each other (even if the wall clock jumps back a second or is otherwise squirrely).

- Once machine A sends events to machine B, all events subsequently created on machine B will be ordered as after those events from machine A. So if A sets their clock one day ahead, makes some changes, and sends them to B, B will still be able to make changes even though its own wall clock is ‘behind’.

When I learned about these clocks, it almost felt like I’d somehow gained a new superpower! I didn’t need to run a central server or rely on accurate wall clocks to get a sane order of events from disconnected peers. This is a simple and resilient way to guarantee ordering of events between multiple distributed actors.

Swift Clocks Package

I had a lot of fun learning about these clock types, and I’ve coded each of them up in Swift. The Clocks repository contains Swift implementations of Lamport, vector, and hybrid logical clocks.

Import as a Swift package using:

https://github.com/adamwulf/Clocks.git